Benchmark - Differences and a tiny bug

Apologies for the length of this post, but I have sort of gone down a few rabbit holes with this.

It started when I was hoping to be able to use the Baseline attribute in a benchmark, so I was looking at the summaries that are returned from Benchmark.

I was able to get this information from the summaries (using the table object):

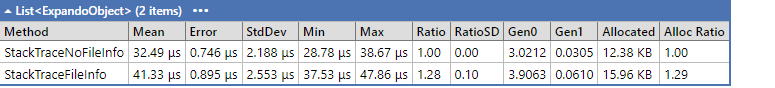

But the values are slightly different from LINQPad's live results.

Compare the last result, which has 41.90 vs 41.33. (This is about a 1.4% difference, but I have seen others where the difference has been almost 5%.)

At first I thought I had done something wrong, but these values agree with the measurements from the summary and also agree with the values from the log file.

So it looks like the LINQPad results are slightly 'wrong'.

So looking at the Benchmark.linq code, I spotted what looks like a bug.

    public double? MeanTimeNoMinMax =>
        TotalOperations == 0 ? null :
        TotalOperations < 3 ? ((double)TotalNanoseconds / TotalOperations) :
        (((double)TotalNanoseconds - MinTime - MaxTime) / (TotalOperations - 2));

There are two issues with this. They are easiest to see with a contrived example of just three measurements:

1) This tests TotalOperations < 3 when it should test whether the number of measurements is < 3.

2) The final line subtracts values in different units (ns minus ns/op), i.e. (218800-1.4709-3.0182)/(98304-2), whereas it should be (217800-48200-98900)/(98304-32768-32768).
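A minimal sketch of what a corrected calculation could look like, addressing both points above. The `Measurement` record and the `TrimmedMean` class are hypothetical names invented for this illustration; they are not the types used in LINQPad's script, which tracks running totals instead. The sketch also assumes every measurement has at least one operation.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical type for illustration only: one benchmark iteration.
public record Measurement(long Operations, double Nanoseconds)
{
    public double NanosecondsPerOp => Nanoseconds / Operations;
}

public static class TrimmedMean
{
    // Mean ns/op after dropping the fastest and slowest measurement.
    // Assumes Operations > 0 for every measurement.
    public static double? MeanTimeNoMinMax(IReadOnlyList<Measurement> measurements)
    {
        if (measurements.Count == 0) return null;

        double totalNs = measurements.Sum(m => m.Nanoseconds);
        long totalOps = measurements.Sum(m => m.Operations);

        // Guard on the number of measurements, not the number of operations.
        if (measurements.Count < 3) return totalNs / totalOps;

        var ordered = measurements.OrderBy(m => m.NanosecondsPerOp).ToList();
        Measurement min = ordered[0];
        Measurement max = ordered[ordered.Count - 1];

        // Subtract like from like: ns from ns, operations from operations.
        return (totalNs - min.Nanoseconds - max.Nanoseconds)
             / (totalOps - min.Operations - max.Operations);
    }
}
```

With exactly three measurements, the result reduces to the ns/op of the middle measurement, which is what you would expect after discarding the minimum and maximum.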

At first I thought this might be responsible for the difference, but it turns out that the overhead is virtually irrelevant in the tests I have done. The overhead only affects a few hundredths of one percent and this error only affects a few hundred thousandths of one percent.

But parsing the log files for the WorkloadActual and WorkloadResult lines does explain the difference. The LINQPad live results agree with the average of all the WorkloadActual lines (again assuming the overhead is negligible), whereas the BenchmarkDotNet figures agree with the average of the WorkloadResult lines.

So it appears that after BenchmarkDotNet has collected all the details for the workloads, it can throw some of them away on the basis that they are outliers.
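For anyone wanting to repeat the comparison, here is a rough sketch of the log parsing. It assumes the log lines look like `WorkloadActual  1: 32768 op, 48200.00 ns, 1.4709 ns/op`; that shape is an assumption about the log format, so adjust the pattern if your logs differ. The `WorkloadLog` class name is made up for this example.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;
using System.Text.RegularExpressions;

public static class WorkloadLog
{
    // Assumed line shape (not verified against every BenchmarkDotNet version):
    //   "WorkloadActual  1: 32768 op, 48200.00 ns, 1.4709 ns/op"
    static readonly Regex Line = new Regex(
        @"^(WorkloadActual|WorkloadResult)\s+\d+:\s+(\d+) op, ([0-9.]+) ns,");

    // Returns the average ns/op per line kind, computed from the op and ns fields.
    public static Dictionary<string, double> MeanNsPerOp(IEnumerable<string> logLines)
    {
        return logLines
            .Select(l => Line.Match(l.Trim()))
            .Where(m => m.Success)
            .GroupBy(m => m.Groups[1].Value)
            .ToDictionary(
                g => g.Key,
                g => g.Average(m =>
                    double.Parse(m.Groups[3].Value, CultureInfo.InvariantCulture)
                    / long.Parse(m.Groups[2].Value, CultureInfo.InvariantCulture)));
    }
}
```

Feeding it the lines of a benchmark log (for example via `File.ReadLines`) gives one average for the WorkloadActual lines and one for the WorkloadResult lines, which can then be compared with the live results and the summary table respectively.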

Note the last job has six measurements that have been thrown away.
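To illustrate how a few slow measurements can be discarded and shift the mean, here is a small sketch of upper-outlier removal using Tukey's fences (anything above Q3 + 1.5 × IQR is dropped). That BenchmarkDotNet uses this exact rule and this quartile definition is an assumption on my part, not something checked against its source; the `UpperOutliers` class is hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class UpperOutliers
{
    // Linear-interpolation quantile on sorted data.
    // Other quantile definitions give slightly different fences.
    static double Quantile(IReadOnlyList<double> sorted, double p)
    {
        double pos = (sorted.Count - 1) * p;
        int lo = (int)Math.Floor(pos);
        int hi = Math.Min(lo + 1, sorted.Count - 1);
        return sorted[lo] + (sorted[hi] - sorted[lo]) * (pos - lo);
    }

    // Returns the values (sorted ascending) that are not above the upper fence.
    public static List<double> RemoveUpper(IEnumerable<double> nsPerOp)
    {
        var sorted = nsPerOp.OrderBy(x => x).ToList();
        if (sorted.Count < 4) return sorted; // too few values to estimate quartiles

        double q1 = Quantile(sorted, 0.25);
        double q3 = Quantile(sorted, 0.75);
        double upperFence = q3 + 1.5 * (q3 - q1);

        return sorted.Where(x => x <= upperFence).ToList();
    }
}
```

Averaging the list before and after calling `RemoveUpper` shows the same kind of gap as between the WorkloadActual and WorkloadResult averages: only slow measurements are removed, so the filtered mean can only stay the same or go down.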

I'm not sure if there is anything that can be done about this or if it makes a real difference (but presumably the BenchmarkDotNet team must think so, otherwise they would not have gone to the trouble).

For myself, I can change the benchmarkDotNet.linq file to dump the details of the various summaries at the end, which finally leads me to the question: what happens if LINQPad is unable to automatically merge any subsequent updates into my modified script?

P.S.

The script I used to test this is at http://share.linqpad.net/vegu8d.linq

Comments

-

Good spot - it does appear that the default BenchmarkDotNet config removes upper outliers, by virtue of the following code in EngineResolver:

    var strategy = job.ResolveValue (RunMode.RunStrategyCharacteristic, this);
    switch (strategy)
    {
        case RunStrategy.Throughput:
            return OutlierMode.RemoveUpper;
        case RunStrategy.ColdStart:
        case RunStrategy.Monitoring:
            return OutlierMode.DontRemove;
        default:
            throw new NotSupportedException ($"Unknown runStrategy: {strategy}");
    }

For consistency, LINQPad's benchmarking visualizer should query AccuracyMode.OutlierModeCharacteristic and apply the same logic if RemoveUpper is returned.

To answer your second question, should a merge fail, it will abort the update and your code will win.

-

Try the latest beta - it now reads the outlier mode setting from IConfig and matches its behavior:

https://www.linqpad.net/linqpad7.aspx#beta

You can elect to include upper outliers in the config via a new argument when calling RunBenchmark (in programmatic mode) or by editing the BenchmarkDotNet query.

-

That works great.

Thank you very much.